In statistical inference, specifically predictive inference, a prediction interval is an estimate of an interval in which a future observation will fall, with a certain probability, given what has already been observed.

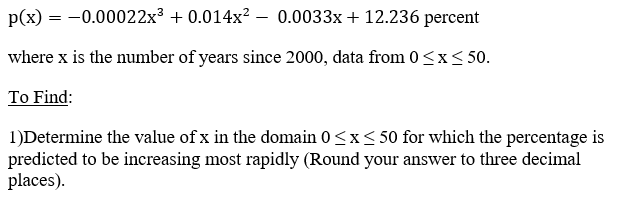

Prediction intervals are often used in regression analysis. A simple example is given by a six-sided die with face values ranging from 1 to 6. The confidence interval for the estimated expected value of the face value will be around 3.5 and will narrow as the sample size grows.

However, the prediction interval for the next roll will approximately range from 1 to 6, even with any number of samples seen so far. Prediction intervals are used in both frequentist statistics and Bayesian statistics: a prediction interval bears the same relationship to a future observation that a frequentist confidence interval or Bayesian credible interval bears to an unobservable population parameter. Prediction intervals predict the distribution of individual future points, whereas confidence intervals and credible intervals of parameters predict the distribution of estimates of the true population mean or other quantity of interest that cannot be observed.
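The die example can be checked with a small simulation. The following is a minimal Python sketch, not part of the original text: the function name `die_intervals`, the seed, and the use of a normal-approximation confidence interval and empirical quantiles are all illustrative assumptions.

```python
import random
import statistics


def die_intervals(n_rolls, seed=0):
    """Return ((ci_low, ci_high), (pi_low, pi_high)) for simulated fair-die rolls."""
    rng = random.Random(seed)
    rolls = [rng.randint(1, 6) for _ in range(n_rolls)]
    mean = statistics.fmean(rolls)
    # Normal-approximation 95% confidence interval for the expected face value.
    half_width = 1.96 * statistics.stdev(rolls) / n_rolls ** 0.5
    # Empirical 95% prediction interval: central quantiles of the observed rolls.
    ordered = sorted(rolls)
    pi_low = ordered[int(0.025 * n_rolls)]
    pi_high = ordered[min(n_rolls - 1, int(0.975 * n_rolls))]
    return (mean - half_width, mean + half_width), (pi_low, pi_high)
```

Calling `die_intervals(100)` and `die_intervals(10_000)` should show the confidence interval shrinking as the sample grows, while the prediction interval for the next roll stays close to the full range of the die.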

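If one makes the parametric assumption that the underlying distribution is a normal distribution and has a sample set $\{X_1, \dots, X_n\}$, then confidence intervals and credible intervals may be used to estimate the population mean $\mu$ and population standard deviation $\sigma$, while prediction intervals may be used to estimate the value of the next sample variable, $X_{n+1}$. Alternatively, in Bayesian terms, a prediction interval can be described as a credible interval for the variable itself, rather than for a parameter of the distribution thereof.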

The concept of prediction intervals need not be restricted to inference about a single future sample value but can be extended to more complicated cases.

For example, in the context of river flooding, where analyses are often based on annual values of the largest flow within the year, there may be interest in making inferences about the largest flood likely to be experienced within the next 50 years.

Since prediction intervals are only concerned with past and future observations, rather than unobservable population parameters, they are advocated as a better method than confidence intervals by some statisticians, such as Seymour Geisser, following the focus on observables by Bruno de Finetti.

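Given a sample from a normal distribution whose parameters are unknown, it is possible to give prediction intervals in the frequentist sense, i.e., intervals computed from the sample that contain the next observation in the stated proportion of repeated experiments. A general technique for frequentist prediction intervals is to find and compute a pivotal quantity of the observables $X_1, \dots, X_n, X_{n+1}$, meaning a function of observables and parameters whose probability distribution does not depend on the parameters, and to invert it to obtain the probability that the future observation $X_{n+1}$ falls in an interval computed from the values observed so far. The most familiar pivotal quantity is the Student's t-statistic, which can be derived by this method and is used in the sequel.

If the mean $\mu$ and variance $\sigma^2$ are known, the prediction interval is $[\mu - z\sigma, \mu + z\sigma]$, with $z$ the quantile of the standard normal distribution for which $P(-z < Z < z) = p$. If the parameters are unknown, one can simply plug the sample mean and sample variance into this formula. This approach is usable, but the resulting interval will not have the repeated sampling interpretation [4]; it is not a predictive confidence interval.

A predictive confidence interval must also account for the uncertainty in the sample mean: $X_{n+1} - \bar{X}_n$ has variance $\sigma^2(1 + 1/n)$, which is larger than $\sigma^2$. This is necessary for the desired confidence interval property to hold. When the variance is unknown as well, $(X_{n+1} - \bar{X}_n) / (s_n \sqrt{1 + 1/n})$ follows a Student's t-distribution with $n - 1$ degrees of freedom, where $s_n$ is the sample standard deviation. This simple combination is possible because the sample mean and sample variance of the normal distribution are independent statistics; this is only true for the normal distribution, and in fact characterizes the normal distribution.

Therefore, the numbers $\bar{X}_n \pm T_a s_n \sqrt{1 + 1/n}$ are the endpoints of a $100p\%$ prediction interval for $X_{n+1}$, where $T_a$ is the $100(1 + p)/2$th percentile of Student's t-distribution with $n - 1$ degrees of freedom. One can also compute prediction intervals without any assumptions on the population, i.e., in a non-parametric way. The residual bootstrap method can be used for constructing non-parametric prediction intervals.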
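Returning to the normal case with unknown mean and variance, the standard interval $\bar{X}_n \pm T_a s_n \sqrt{1 + 1/n}$ can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `normal_prediction_interval` is hypothetical, and the Student's t quantile `t_crit` is assumed to be supplied by the caller (for example from a t table), since the Python standard library does not provide it.

```python
import math
import statistics


def normal_prediction_interval(sample, t_crit):
    """Two-sided prediction interval for the next draw from a normal sample.

    t_crit is the Student's t quantile with len(sample) - 1 degrees of
    freedom for the desired coverage; it is an input, not computed here.
    """
    n = len(sample)
    mean = statistics.fmean(sample)
    s = statistics.stdev(sample)  # sample standard deviation (n - 1 divisor)
    # The factor sqrt(1 + 1/n) adds the uncertainty of the sample mean
    # to the spread of the new observation.
    half_width = t_crit * s * math.sqrt(1 + 1 / n)
    return mean - half_width, mean + half_width
```

For instance, with the made-up sample `[9.8, 10.1, 10.0, 9.9, 10.2]` and `t_crit = 2.776` (the 97.5th percentile of the t-distribution with 4 degrees of freedom), the interval is roughly 9.52 to 10.48.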

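The conformal prediction method is more general still. Consider the special case of using the sample minimum and maximum as the boundaries of a prediction interval. If one has a sample of identically distributed random variables $\{X_1, \dots, X_n\}$, then the probability that the next observation $X_{n+1}$ is the largest is $1/(n + 1)$, since every observation is equally likely to be the maximum; likewise, the probability that it is the smallest is $1/(n + 1)$. The remaining $(n - 1)/(n + 1)$ of the time, $X_{n+1}$ falls between the sample minimum $m$ and the sample maximum $M$, so $[m, M]$ is an $(n - 1)/(n + 1)$ prediction interval. Notice that while this gives the probability that a future observation will fall in a range, it does not give any estimate as to where in a segment it will fall; notably, if it falls outside the range of observed values, it may be far outside the range.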
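The coverage of the minimum-to-maximum interval is $(n - 1)/(n + 1)$ for a continuous distribution, and this can be checked numerically. The sketch below is a hedged illustration: the function names, the uniform distribution, the trial count, and the seed are all assumptions chosen for the demonstration.

```python
import random


def minmax_coverage(n):
    """Probability that the next draw lies between the min and max of n draws."""
    return (n - 1) / (n + 1)


def simulate_minmax_coverage(n, trials=20000, seed=1):
    """Estimate the same probability by simulation with uniform draws."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.random() for _ in range(n)]
        next_value = rng.random()
        if min(sample) <= next_value <= max(sample):
            hits += 1
    return hits / trials
```

With `n = 19`, the formula gives 18/20, so the range of 19 observations serves as a 90% prediction interval; the simulated value should land close to that.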

See extreme value theory for further discussion. Formally, this applies not just to sampling from a population, but to any exchangeable sequence of random variables, not necessarily independent or identically distributed. In parameter confidence intervals, one estimates population parameters; if one wishes to interpret this as prediction of the next sample, one models "the next sample" as a draw from this estimated population, using the estimated population distribution.

A common application of prediction intervals is to regression analysis. In regression, Faraway distinguishes between intervals for predictions of the mean response and intervals for predictions of the observed response, which essentially affects whether the unity term is included within the square root in the expansion factors above; for details, see Faraway. Seymour Geisser, a proponent of predictive inference, gives predictive applications of Bayesian statistics.

In Bayesian statistics, one can compute Bayesian prediction intervals from the posterior probability of the random variable, as a credible interval. In theoretical work, credible intervals are not often calculated for the prediction of future events, but for inference of parameters, i.e., credible intervals of a parameter rather than of the outcomes of the variable itself.

However, particularly where applications are concerned with possible extreme values of yet to be observed cases, credible intervals for such values can be of practical importance. Prediction intervals are commonly used as definitions of reference ranges, such as reference ranges for blood tests to give an idea of whether a blood test is normal or not.


| Prediction | Model selection with interaction terms: in problems involving many variables, it can be challenging to decide which interaction terms should be included in the model. With confounding variables, the problem is one of omission: an important variable is not included in the regression equation. |

| Variable settings | For example, a materials engineer at a furniture manufacturer develops a simple regression model to predict the stiffness of particleboard from the density of the board. The engineer verifies that the model meets the assumptions of the analysis and then uses it to predict the stiffness of a new observation with a density of 25. Such an observation is unlikely to have exactly the predicted stiffness, so the prediction is best read together with its prediction interval.
The way that you predict with the model depends on how you created the model. If you create the model with Discover Best Model (Continuous Response), click Predict in the results.
Regression equation: Use the regression equation to describe the relationship between the response and the terms in the model. Each term can be a single predictor, a polynomial term, or an interaction term.
Variable settings: Minitab uses the regression equation and the variable settings to calculate the fit.
Fit: Fitted values are also called fits or ŷ. They are calculated by entering x-values into the model equation for a response variable.
SE Fit: The standard error of the fit (SE fit) estimates the variation in the estimated mean response for the specified variable settings. Use it to measure the precision of the estimate of the mean response.
Confidence interval: Use the confidence interval to assess the estimate of the fitted value for the observed values of the variables.
Prediction interval: Use the prediction interval (PI) to assess the precision of the predictions.
The Y variable is known as the response or dependent variable since it depends on X. The X variable is known as the predictor or independent variable. The machine learning community tends to use other terms, calling Y the target and X a feature vector.
How is PEFR related to Exposure? The model is fit with the lm function in R: lm stands for linear model, and the ~ symbol denotes that PEFR is predicted by Exposure. The fit yields an intercept, $b_0$, and a slope, $b_1$, and the regression line from this model is displayed in the accompanying figure.
Important concepts in regression analysis are the fitted values and residuals. These are given by:
$$\hat{Y}_i = \hat{b}_0 + \hat{b}_1 X_i, \qquad \hat{e}_i = Y_i - \hat{Y}_i$$
The hat notation marks estimates. Why do statisticians differentiate between the estimate and the true value? The estimate has uncertainty, whereas the true value is fixed. In R, we can obtain the fitted values and residuals using the functions predict and residuals. The residuals from the regression line fit to the lung data are the lengths of the vertical dashed lines from the data to the line in the corresponding figure.
How is the model fit to the data? When there is a clear relationship, you could imagine fitting the line by hand. In practice, the regression line is the estimate that minimizes the sum of squared residual values, also called the residual sum of squares or RSS:
$$RSS = \sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2 = \sum_{i=1}^{n} (Y_i - \hat{b}_0 - \hat{b}_1 X_i)^2$$
The method of minimizing the sum of the squared residuals is termed least squares regression, or ordinary least squares (OLS) regression. It is often attributed to Carl Friedrich Gauss, the German mathematician, but was first published by the French mathematician Adrien-Marie Legendre. Least squares regression leads to a simple formula to compute the coefficients:
$$\hat{b}_1 = \frac{\sum_{i=1}^{n} (Y_i - \bar{Y})(X_i - \bar{X})}{\sum_{i=1}^{n} (X_i - \bar{X})^2}, \qquad \hat{b}_0 = \bar{Y} - \hat{b}_1 \bar{X}$$
Historically, computational convenience is one reason for the widespread use of least squares in regression. With the advent of big data, computational speed is still an important factor.
When analysts and researchers use the term regression by itself, they are typically referring to linear regression; the focus is usually on developing a linear model to explain the relationship between predictor variables and a numeric outcome variable. In its formal statistical sense, regression also includes nonlinear models that yield a functional relationship between predictors and outcome variables.
In the machine learning community, the term is also occasionally used loosely to refer to any predictive model that produces a predicted numeric outcome, in distinction from classification methods that predict a binary or categorical outcome.
Historically, a primary use of regression was to illuminate a supposed linear relationship between predictor variables and an outcome variable. The goal has been to understand a relationship and explain it using the data that the regression was fit to. Economists want to know the relationship between consumer spending and GDP growth. Public health officials might want to understand whether a public information campaign is effective in promoting safe sex practices. In such cases, the focus is not on predicting individual cases, but rather on understanding the overall relationship.
With the advent of big data, regression is widely used to form a model to predict individual outcomes for new data, rather than to explain data in hand. In marketing, regression can be used to predict the change in revenue in response to the size of an ad campaign.
A regression model that fits the data well is set up such that changes in X lead to changes in Y. However, by itself, the regression equation does not prove the direction of causation. Conclusions about causation must come from a broader context of understanding about the relationship. For example, a regression equation might show a definite relationship between number of clicks on a web ad and number of conversions. It is our knowledge of the marketing process, not the regression equation, that leads us to the conclusion that clicks on the ad lead to sales, and not vice versa.
When there are multiple predictors, the equation is simply extended to accommodate them:
$$Y = b_0 + b_1 X_1 + b_2 X_2 + \dots + b_p X_p + e$$
Instead of a line, we now have a linear model: the relationship between each coefficient and its variable (feature) is linear.
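For the single-predictor case, the least squares estimates are the slope $\sum (X_i - \bar{X})(Y_i - \bar{Y}) / \sum (X_i - \bar{X})^2$ and the intercept $\bar{Y}$ minus the slope times $\bar{X}$. The sketch below computes them in plain Python rather than with R's lm; the function name `fit_simple_ols` and its return layout are illustrative assumptions.

```python
def fit_simple_ols(x, y):
    """Fit y = b0 + b1 * x by ordinary least squares.

    Returns (b0, b1, fitted, residuals, rss).
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx            # slope
    b0 = y_bar - b1 * x_bar   # intercept
    fitted = [b0 + b1 * xi for xi in x]
    residuals = [yi - fi for yi, fi in zip(y, fitted)]
    rss = sum(r ** 2 for r in residuals)  # residual sum of squares
    return b0, b1, fitted, residuals, rss
```

On exactly linear data such as `x = [1, 2, 3, 4]` and `y = [3, 5, 7, 9]`, the function recovers an intercept of 1 and a slope of 2, and the residual sum of squares is zero up to rounding.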
All of the other concepts in simple linear regression, such as fitting by least squares and the definition of fitted values and residuals, extend to the multiple linear regression setting. For example, the fitted values are given by:
$$\hat{Y}_i = \hat{b}_0 + \hat{b}_1 X_{1,i} + \hat{b}_2 X_{2,i} + \dots + \hat{b}_p X_{p,i}$$
An example of using regression is in estimating the value of houses. County assessors must estimate the value of a house for the purposes of assessing taxes. Real estate consumers and professionals consult popular websites such as Zillow to ascertain a fair price. The example uses housing data from King County (Seattle), Washington, held in the house data.frame. The goal is to predict the sales price from the other variables. The lm function handles the multiple regression case simply by including more terms on the righthand side of the equation; the argument na.action=na.omit causes the model to drop records that have missing values.
The most important performance metric from a data science perspective is root mean squared error, or RMSE. This measures the overall accuracy of the model, and is a basis for comparing it to other models, including models fit using machine learning techniques. Similar to RMSE is the residual standard error, or RSE. In this case we have p predictors, and the RSE is given by:
$$RSE = \sqrt{\frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{n - p - 1}}$$
In practice, for linear regression, the difference between RMSE and RSE is very small, particularly for big data applications. The summary function in R computes RSE as well as other metrics for a regression model.
Another useful metric that you will see in software output is the coefficient of determination, also called the R-squared statistic or $R^2$. R-squared ranges from 0 to 1 and measures the proportion of variation in the data that is accounted for in the model. It is useful mainly in explanatory uses of regression where you want to assess how well the model fits the data. The formula for $R^2$ is:
$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$
The denominator is proportional to the variance of Y.
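The three metrics differ only in how the residual sum of squares is scaled: RMSE divides it by n, RSE divides it by n - p - 1, and $R^2$ compares it with the total sum of squares around the mean. The following Python sketch makes that explicit; the function name `regression_metrics` and the dictionary keys are hypothetical, and the fitted values are assumed to come from some already-fitted model with p predictors.

```python
import math


def regression_metrics(y, fitted, p):
    """Return RMSE, RSE and R-squared for observed y and fitted values."""
    n = len(y)
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    y_bar = sum(y) / n
    tss = sum((yi - y_bar) ** 2 for yi in y)  # total sum of squares
    return {
        "rmse": math.sqrt(rss / n),
        "rse": math.sqrt(rss / (n - p - 1)),  # adjusts for degrees of freedom
        "r2": 1 - rss / tss,
    }
```

With many records and few predictors, n and n - p - 1 are nearly equal, which is why RMSE and RSE are so close in practice.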
The output from R also reports an adjusted R-squared, which adjusts for the degrees of freedom; seldom is this significantly different in multiple regression. Along with the estimated coefficients, R reports the standard error of the coefficients (SE) and a t-statistic:
$$t_b = \frac{\hat{b}}{SE(\hat{b})}$$
The higher the t-statistic and the lower the p-value, the more significant the predictor. Data scientists do not generally get too involved with the interpretation of these statistics, nor with the issue of statistical significance. Data scientists primarily focus on the t-statistic as a useful guide for whether to include a predictor in a model or not. High t-statistics (which go with p-values near 0) indicate a predictor should be retained in a model, while very low t-statistics indicate a predictor could be dropped.
Intuitively, you can see that it would make a lot of sense to set aside some of the original data, not use it to fit the model, and then apply the model to the set-aside (holdout) data to see how well it does. Normally, you would use a majority of the data to fit the model, and use a smaller portion to test the model. Using a holdout sample, though, leaves you subject to some uncertainty that arises simply from variability in the small holdout sample. How different would the assessment be if you selected a different holdout sample?
Cross-validation extends the idea of a holdout sample to multiple sequential holdout samples. In basic k-fold cross-validation, 1/k of the data is set aside as a holdout sample, the model is trained on the remaining data and scored on the holdout, and the assessment metrics are recorded; the holdout is then restored and the next 1/k of the data is set aside, and so on until every record has been used in a holdout portion. The recorded metrics are then averaged or otherwise combined. The division of the data into the training sample and the holdout sample is also called a fold.
In some problems, many variables could be used as predictors in a regression. For example, to predict house value, additional variables such as the basement size or year built could be used. In R, these are easy to add to the regression equation. Adding more variables, however, does not necessarily mean we have a better model. Including additional variables always reduces RMSE and increases $R^2$.
Hence, these are not appropriate to help guide the model choice. A metric that penalizes adding terms to a model is AIC (Akaike's information criterion). In the case of regression, AIC has the form:
$$AIC = 2P + n \log(RSS / n)$$
where P is the number of variables and n is the number of records. The goal is to find the model that minimizes AIC; models with k more variables are penalized by 2k. The formula for AIC may seem a bit mysterious, but in fact it is based on asymptotic results in information theory. There are several variants of AIC. BIC, or Bayesian information criterion, is similar to AIC with a stronger penalty for including additional variables in the model. Mallows Cp is a variant of AIC developed by Colin Mallows. Data scientists generally do not need to worry about the differences among these in-sample metrics or the underlying theory behind them.
How do we find the model that minimizes AIC? One approach is to search through all possible models, called all subset regression. This is computationally expensive and is not feasible for problems with large data and many variables. An attractive alternative is to use stepwise regression, which successively adds and drops predictors to find a model that lowers AIC. The MASS package by Venables and Ripley offers a stepwise regression function called stepAIC.
Simpler yet are forward selection and backward selection. In forward selection, you start with no predictors and add them one by one, at each step adding the predictor that has the largest contribution to $R^2$, stopping when the contribution is no longer statistically significant. In backward selection, or backward elimination, you start with the full model and take away predictors that are not statistically significant until you are left with a model in which all predictors are statistically significant.
Penalized regression is similar in spirit to AIC. Instead of explicitly searching through a discrete set of models, the model-fitting equation incorporates a constraint that penalizes the model for too many variables (parameters).
Rather than eliminating predictor variables entirely, as with stepwise, forward, and backward selection, penalized regression applies the penalty by reducing coefficients, in some cases to near zero. Common penalized regression methods are ridge regression and lasso regression.
Stepwise regression and all subset regression are in-sample methods to assess and tune models. This means the model selection is possibly subject to overfitting and may not perform as well when applied to new data. One common approach to avoid this is to use cross-validation to validate the models. In linear regression, overfitting is typically not a major issue, due to the simple (linear) global structure imposed on the data.
Weighted regression is used by statisticians for a variety of purposes; in particular, it is important for analysis of complex surveys. Data scientists may find weighted regression useful in two cases: inverse-variance weighting, when different observations have been measured with different precision; and analysis of data in an aggregated form, such that the weight variable encodes how many original observations each row in the aggregated data represents. For example, with the housing data, older sales are less reliable than more recent sales. Using the DocumentDate to determine the year of the sale, we can compute a Weight as the number of years since the beginning of the data. We can compute a weighted regression with the lm function using the weights argument.
An excellent treatment of cross-validation and resampling can be found in An Introduction to Statistical Learning by Gareth James, et al. (Springer).
The primary purpose of regression in data science is prediction. This is useful to keep in mind, since regression, being an old and established statistical method, comes with baggage that is more relevant to its traditional explanatory modeling role than to prediction. Regression models should not be used to extrapolate beyond the range of the data. With the housing data, for example, predictions for vacant land are unreliable. Why?
The data contains only parcels with buildings; there are no records corresponding to vacant land. Consequently, the model has no information to tell it how to predict the sales price for vacant land.
Much of statistics involves understanding and measuring variability (uncertainty). More useful than t-statistics and p-values are confidence intervals, which are uncertainty intervals placed around regression coefficients and predictions. The most common regression confidence intervals encountered in software output are those for regression parameters (coefficients). A bootstrap algorithm for generating confidence intervals for the coefficients of a data set with P predictors and n records (rows) works as follows: draw n records with replacement, fit the regression to the resample, record the estimated coefficients, and repeat many times. You now have a set of bootstrap values for each coefficient; find the appropriate percentiles for each one (for example, the 5th and 95th for a 90% confidence interval). You can use the Boot function in R to generate actual bootstrap confidence intervals for the coefficients, or you can simply use the formula-based intervals that are a routine R output. The conceptual meaning and interpretation are the same, and not of central importance to data scientists, because they concern the regression coefficients.
The uncertainty around a predicted value comes from two sources: uncertainty about what the relevant predictor variables and their coefficients are (see the preceding bootstrap algorithm), and additional error inherent in individual data points. The individual data point error can be thought of as follows: even if we knew for certain what the regression equation was, the actual outcome values for a given set of predictor values would vary. For example, several houses, each with 8 rooms, 3 bathrooms, a basement, and the same lot size, might have different values. We can model this individual error with the residuals from the fitted values. The bootstrap algorithm for modeling both the regression model error and the individual data point error would look as follows: take a bootstrap sample from the data; fit the regression and predict the new value; take a single residual at random from the original regression fit, add it to the predicted value, and record the result; repeat many times and take the appropriate percentiles of the recorded results.
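The bootstrap prediction interval can be sketched for a single predictor as follows: resample the records, refit the line, predict the new value, and add one residual drawn at random from the original fit. This Python sketch is illustrative only; the function names, the default of 1000 resamples, the seed, and the simple percentile indexing are assumptions rather than details from the text.

```python
import random


def fit_line(x, y):
    """Least squares intercept and slope for one predictor."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - b1 * x_bar, b1


def bootstrap_prediction_interval(x, y, x_new, n_boot=1000, level=0.95, seed=0):
    """Percentile bootstrap prediction interval for a new response at x_new."""
    rng = random.Random(seed)
    n = len(x)
    b0, b1 = fit_line(x, y)
    # Residuals of the original fit model the individual data point error.
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample with replacement
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        if len(set(xs)) < 2:
            continue  # a line cannot be fit to a single distinct x value
        c0, c1 = fit_line(xs, ys)
        draws.append(c0 + c1 * x_new + rng.choice(residuals))
    draws.sort()
    tail = (1 - level) / 2
    low = draws[int(tail * len(draws))]
    high = draws[min(len(draws) - 1, int((1 - tail) * len(draws)))]
    return low, high
```

Dropping the `rng.choice(residuals)` term would turn this into an interval for the mean prediction only, which is narrower because it ignores the individual data point error.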
A prediction interval pertains to uncertainty around a single value, while a confidence interval pertains to a mean or other statistic calculated from multiple values. Thus, a prediction interval will typically be much wider than a confidence interval for the same value. We model this individual value error in the bootstrap model by selecting an individual residual to tack on to the predicted value. Which should you use? That depends on the context and the purpose of the analysis, but, in general, data scientists are interested in specific individual predictions, so a prediction interval would be more appropriate. Using a confidence interval when you should be using a prediction interval will greatly underestimate the uncertainty in a given predicted value. Factor variables, also termed categorical variables, take on a limited number of discrete values. Regression requires numerical inputs, so factor variables need to be recoded to use in the model. The most common approach is to convert a variable into a set of binary dummy variables. In the King County housing data, there is a factor variable for the property type; a small subset of six records is shown below. There are three possible values: Multiplex , Single Family , and Townhouse. To use this factor variable, we need to convert it to a set of binary variables. We do this by creating a binary variable for each possible value of the factor variable. |

| Prediction Equation Calculator | For this example the analyst enters the data into Minitab and generates a normal probability plot. The normal probability plot is shown in Figure 2. The interval in this case is 6. The interpretation of the interval concerns successive samples pulled and tested from the same population. If, instead of a single future observation, the analyst wanted to calculate a two-sided prediction interval to include multiple future observations, the analyst would simply modify the t value in the prediction interval equation. While exact methods exist for deriving the value of t for multiple future observations, in practice it is simpler to adjust the level of t by dividing the significance level, α, by the number of future observations to be included in the prediction interval. This is done to maintain the desired significance level over the entire family of future observations. There are also situations where only a lower or an upper bound is of interest. Take, for example, an acceptance criterion that only requires a physical property of a material to meet or exceed a minimum value, with no upper limit to the value of the physical property. In these cases the analyst would want to calculate a one-sided interval. We turn now to the application of prediction intervals in linear regression statistics. In linear regression statistics, a prediction interval defines a range of values within which a response is likely to fall given a specified value of a predictor. In a linear regression the response values are not draws from a single normal distribution: a sample from one normal population shares a common mean, whereas regressed data depend on a predictor value. Because of this dependency, prediction intervals applied to linear regression statistics are considerably more involved to calculate than are prediction intervals for normally distributed data. 
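For the single-sample case discussed above, a minimal sketch of the usual normal-theory interval, mean ± t · s · sqrt(1 + 1/n), may help. This is the standard textbook form, assumed here because the original equation did not survive; the function names are hypothetical, and the t critical value is supplied by the caller from a t table (n − 1 degrees of freedom) rather than computed.

```python
import math
import statistics


def normal_prediction_interval(sample, t_crit):
    """Two-sided prediction interval for one future observation.

    t_crit is the Student t critical value for len(sample) - 1 degrees of
    freedom, looked up by the caller (for example 2.776 for 95% and n = 5).
    """
    n = len(sample)
    mean = statistics.mean(sample)
    s = statistics.stdev(sample)  # sample standard deviation (n - 1 divisor)
    half_width = t_crit * s * math.sqrt(1 + 1 / n)
    return mean - half_width, mean + half_width


def bonferroni_alpha(alpha, k):
    """Per-observation significance level when covering k future observations."""
    return alpha / k
```

To cover k future observations at once, one would look up the t value at `bonferroni_alpha(alpha, k)` (halved again for a two-sided interval) and pass it as `t_crit`; for a one-sided bound, only the relevant end of the returned pair is used, with a one-sided t value.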
The uncertainty represented by a prediction interval includes not only the uncertainties (variation) associated with the population mean and the new observation, but the uncertainty associated with the regression parameters as well. Because the uncertainties associated with the population mean and new observation are independent of the observations used to fit the model, the uncertainty estimates must be combined using root-sum-of-squares to yield the total uncertainty, S_p, where S_f², the variance of the fitted value, is expressed in terms of the predictor values. Below is the sequence of steps that can be followed to calculate a prediction interval for a regressed response variable given a specified value of a predictor. The equations in Step 3 represent the regression parameters, i.e., the slope and intercept. The prediction interval then brackets the estimated response at the specified value of x. Calculate the sum of squares and error terms. For example, suppose an analyst has collected raw data for a process and a linear relationship is suspected to exist between a predictor variable denoted by x and a response variable denoted by ŷ₀. The raw data are presented below. Following the ANOVA procedure outlined above, the analyst first calculates the mean of both the predictor variable, x, and the response variable, ŷ₀. After completing the table of sums, the analyst proceeds to calculate the slope (b̂₁), intercept (b̂₀), total sum of squares (SS Total), sum of squares of the residuals (SS Residuals), sum of squares of the error (SS Error) and the error (S_e) for the data. Next, the analyst calculates the value of the response variable, ŷ₀, at the desired value of the predictor variable, x. In this case the desired predictor value is 5. If the data are in fact linear, the data should track closely along the trend line with about half the points above and half the points below (see Figure 3). 
Data that do not track closely about the trend line indicate that the linear relationship is weak, or that the relationship is non-linear and some other model is required to obtain an adequate fit. In this case calculation of a prediction interval should not be attempted until a more adequate model is found. Also, if the relationship is strongly linear, a normality test on the residuals should yield a P-value much greater than the chosen significance level. Residuals are calculated as the difference between the actual response values and the predicted values, and a normal probability plot of the residuals can then be prepared (see Figure 4). After establishing the linear relationship between the predictor and response variables and checking the assumption that the residuals are normally distributed, the analyst is ready to compute the prediction interval. Since the analyst is interested in a two-sided interval, α must be divided by 2. Figure 5 shows the scatter plot from Figure 3 with the calculated prediction interval upper and lower bounds added. This procedure must be repeated for other values of x because the variation associated with the estimated parameters is not constant throughout the predictor range. For instance, the prediction intervals calculated may be smaller at some values of x and larger at others. This method for calculating a prediction interval for linear-regressed data does not work for non-linear relationships. These cases require transformation of the data to emulate a linear relationship or application of other statistical distributions to model the data. These methods are available in most statistical software packages, but explanation of these methods is beyond the scope of this article. 
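The procedure described above (fit the line, compute the residual standard error, then bracket the estimated response) can be sketched as follows. This is an illustration under stated assumptions, not the article's own worked example: the function names are hypothetical, the interval uses the standard simple-regression form ŷ₀ ± t · S_e · sqrt(1 + 1/n + (x₀ − x̄)²/S_xx), and the t critical value (n − 2 degrees of freedom, α/2 for a two-sided interval) is supplied by the caller.

```python
import math


def fit_line(xs, ys):
    """Least-squares slope and intercept for a simple linear regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def regression_prediction_interval(xs, ys, x0, t_crit):
    """Prediction interval for a single new response at predictor value x0.

    t_crit is the Student t critical value for n - 2 degrees of freedom.
    Returns (lower, predicted, upper).
    """
    n = len(xs)
    slope, intercept = fit_line(xs, ys)
    x_bar = sum(xs) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    s_e = math.sqrt(sse / (n - 2))  # residual standard error
    y0_hat = intercept + slope * x0
    half_width = t_crit * s_e * math.sqrt(1 + 1 / n + (x0 - x_bar) ** 2 / sxx)
    return y0_hat - half_width, y0_hat, y0_hat + half_width
```

Under this formula the interval is narrowest at the mean of the predictor and widens as x₀ moves away from it, which is why the calculation has to be repeated for each predictor value of interest.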
Linear regression is one of the most popular modeling techniques because, in addition to explaining the relationship between variables (like correlation), it also gives an equation that can be used to predict the value of a response variable based on a value of the predictor variable. If you're thinking simple linear regression may be appropriate for your project, first make sure it meets the assumptions of linear regression. While it is possible to calculate linear regression by hand, it involves a lot of sums and squares, not to mention sums of squares! So if you're asking how to find linear regression coefficients or how to find the least squares regression line, the best answer is to use software that does it for you. Linear regression calculators determine the line of best fit by minimizing the sum of squared error terms (the squared differences between the data points and the line). The calculator will graph and output a simple linear regression model for you, along with testing the relationship and the model equation. Keep in mind that Y is your dependent variable: the one you're ultimately interested in predicting (e.g., cost of homes). X is simply a variable used to make that prediction (e.g., square footage of homes). The first portion of results contains the best-fit values of the slope and Y-intercept terms. These parameter estimates build the regression line of best fit. You can see how they fit into the equation at the bottom of the results section. Use the goodness-of-fit section to learn how close the relationship is. R-squared quantifies the percentage of variation in Y that can be explained by the value of X. 
The next question may seem odd at first glance: Is the slope significantly non-zero? This goes back to the slope parameter specifically. If it is significantly different from zero, then there is reason to believe that X can be used to predict Y. If not, the model's line is not any better than no line at all, so the model is not particularly useful! P-values help with interpretation here: if the P-value is smaller than some chosen threshold, the slope is considered significantly different from zero. |
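The two quantities discussed above, R-squared and the test of whether the slope is non-zero, can be computed directly from the sums of squares. The sketch below is an assumption-laden illustration (the function name is hypothetical): it returns R² = 1 − SSE/SST and the t statistic for the slope, which would then be compared against a t critical value with n − 2 degrees of freedom.

```python
import math


def slope_test(xs, ys):
    """Return (r_squared, t_statistic) for a simple linear regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual (error) and total sums of squares
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    sst = sum((y - y_bar) ** 2 for y in ys)
    r_squared = 1 - sse / sst
    # Standard error of the slope estimate
    se_slope = math.sqrt(sse / (n - 2)) / math.sqrt(sxx)
    return r_squared, slope / se_slope
```

A t statistic whose absolute value exceeds the critical value corresponds to a P-value below the chosen threshold, i.e., a slope that is significantly different from zero.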

| 4. Regression and Prediction - Practical Statistics for Data Scientists [Book] | Let's try to understand the prediction interval to see what causes the extra MSE term. In doing so, let's start with an easier problem first. What is the predicted skin cancer mortality in Columbus, Ohio? That is, if someone wanted to know the skin cancer mortality rate for a location at 40 degrees north, our best guess would be a range of deaths per 10 million. Reality sets in: because we have to estimate these unknown quantities, the variation in the prediction of a new response depends on two components: the variation due to estimating the mean response, and the variation of individual responses about that mean, which is estimated by the MSE. 
The linear regression calculator generates the linear regression equation. It also draws a linear regression line, a histogram, a residuals QQ-plot, a residuals x-plot, and a distribution chart. It calculates the R-squared, the R, and the outliers, then tests the fit of the linear model to the data and checks the residuals' normality assumption and the a priori power. |

| Prediction for Mort | Standard errors are always non-negative. Use the standard error of the fit to measure the precision of the estimate of the mean response. The smaller the standard error, the more precise the predicted mean response. For example, an analyst develops a model to predict delivery time. For one set of variable settings, the model predicts a mean delivery time of 3. The standard error of the fit for these settings is 0. For a second set of variable settings, the model produces the same mean delivery time with a standard error of the fit of 0. The analyst can be more confident that the mean delivery time for the second set of variable settings is close to 3. With the fitted value, you can use the standard error of the fit to create a confidence interval for the mean response. When the standard error is 0. The confidence interval for the second set of variable settings is narrower because the standard error is smaller. The confidence interval for the fit provides a range of likely values for the mean response given the specified settings of the predictors. Use the confidence interval to assess the estimate of the fitted value for the observed values of the variables. The confidence interval helps you assess the practical significance of your results. Use your specialized knowledge to determine whether the confidence interval includes values that have practical significance for your situation. A wide confidence interval indicates that you can be less confident about the mean of future values. If the interval is too wide to be useful, consider increasing your sample size. The prediction interval is a range that is likely to contain a single future response for a selected combination of variable settings. Use the prediction intervals PI to assess the precision of the predictions. The prediction intervals help you assess the practical significance of your results. 
If a prediction interval extends outside of acceptable boundaries, the predictions might not be sufficiently precise for your requirements. The prediction interval is always wider than the confidence interval because of the added uncertainty involved in predicting a single response versus the mean response. For example, a materials engineer at a furniture manufacturer develops a simple regression model to predict the stiffness of particleboard from the density of the board. The engineer verifies that the model meets the assumptions of the analysis. Then, the engineer uses the model to predict the stiffness. The regression equation predicts the stiffness for a new observation with a density of 25, although such an observation is unlikely to have a stiffness of exactly the predicted value. Treating ordered factors as a numeric variable preserves the information contained in the ordering that would be lost if it were converted to a factor. In data science, the most important use of regression is to predict some dependent outcome variable. In some cases, however, gaining insight from the equation itself to understand the nature of the relationship between the predictors and the outcome can be of value. This section provides guidance on examining the regression equation and interpreting it. In multiple regression, the predictor variables are often correlated with each other. The coefficient for Bedrooms is negative! This implies that adding a bedroom to a house will reduce its value. How can this be? This is because the predictor variables are correlated: larger houses tend to have more bedrooms, and it is the size that drives house value, not the number of bedrooms. Consider two homes of the exact same size: it is reasonable to expect that a home with more, but smaller, bedrooms would be considered less desirable. 
Having correlated predictors can make it difficult to interpret the sign and value of regression coefficients and can inflate the standard error of the estimates. The variables for bedrooms, house size, and number of bathrooms are all correlated. This is illustrated by fitting another regression that removes the variables SqFtTotLiving, SqFtFinBasement, and Bathrooms from the equation. The update function can be used to add or remove variables from a model. Now the coefficient for bedrooms is positive, in line with what we would expect (though it is really acting as a proxy for house size, now that those variables have been removed). Correlated variables are only one issue with interpreting regression coefficients. An extreme case of correlated variables produces multicollinearity, a condition in which there is redundancy among the predictor variables. Perfect multicollinearity occurs when one predictor variable can be expressed as a linear combination of others. Multicollinearity in regression must be addressed: variables should be removed until the multicollinearity is gone. A regression does not have a well-defined solution in the presence of perfect multicollinearity. Many software packages, including R, automatically handle certain types of multicollinearity. In the case of nonperfect multicollinearity, the software may obtain a solution but the results may be unstable. Multicollinearity is not such a problem for nonregression methods like trees, clustering, and nearest-neighbors, and in such methods it may be advisable to retain P dummies instead of P - 1. That said, even in those methods, nonredundancy in predictor variables is still a virtue. With correlated variables, the problem is one of commission: including different variables that have a similar predictive relationship with the response. 
With confounding variables , the problem is one of omission: an important variable is not included in the regression equation. Naive interpretation of the equation coefficients can lead to invalid conclusions. The regression coefficients of SqFtLot , Bathrooms , and Bedrooms are all negative. The original regression model does not contain a variable to represent location—a very important predictor of house price. To model location, include a variable ZipGroup that categorizes the zip code into one of five groups, from least expensive 1 to most expensive 5. The coefficient for Bedrooms is still negative. While this is unintuitive, this is a well-known phenomenon in real estate. For homes of the same livable area and number of bathrooms, having more, and therefore smaller, bedrooms is associated with less valuable homes. Statisticians like to distinguish between main effects , or independent variables, and the interactions between the main effects. Main effects are what are often referred to as the predictor variables in the regression equation. An implicit assumption when only main effects are used in a model is that the relationship between a predictor variable and the response is independent of the other predictor variables. This is often not the case. Location in real estate is everything, and it is natural to presume that the relationship between, say, house size and the sale price depends on location. A big house built in a low-rent district is not going to retain the same value as a big house built in an expensive area. For the King County data, the following fits an interaction between SqFtTotLiving and ZipGroup :. The resulting model has four new terms: SqFtTotLiving:ZipGroup2 , SqFtTotLiving:ZipGroup3 , and so on. Location and house size appear to have a strong interaction. In other words, adding a square foot in the most expensive zip code group boosts the predicted sale price by a factor of almost 2. 
In problems involving many variables, it can be challenging to decide which interaction terms should be included in the model. Several different approaches are commonly taken. In some problems, prior knowledge and intuition can guide the choice of which interaction terms to include in the model. Penalized regression can automatically fit to a large set of possible interaction terms. Perhaps the most common approach is to use tree models, as well as their descendants, random forest and gradient boosted trees. In explanatory modeling, various steps are taken to assess how well the model fits the data. Most are based on analysis of the residuals, which can test the assumptions underlying the model. These steps do not directly address predictive accuracy, but they can provide useful insight in a predictive setting. Generally speaking, an extreme value, also called an outlier, is one that is distant from most of the other observations. In regression, an outlier is a record whose actual y value is distant from the predicted value. You can detect outliers by examining the standardized residual, which is the residual divided by the standard error of the residuals. There is no statistical theory that separates outliers from nonoutliers. Rather, there are arbitrary rules of thumb for how distant from the bulk of the data an observation needs to be in order to be called an outlier. In R, the standardized residuals can be extracted using the rstandard function, and the index of the smallest residual obtained using the order function. In the King County example, an excerpt from the statutory deed for the sale with the smallest residual makes it clear that the sale involved only partial interest in the property. In this case, the outlier corresponds to a sale that is anomalous and should not be included in the regression. For big data problems, outliers are generally not a problem in fitting the regression to be used in predicting new data. 
However, outliers are central to anomaly detection, where finding outliers is the whole point. The outlier could also correspond to a case of fraud or an accidental action. In any case, detecting outliers can be a critical business need. A value whose absence would significantly change the regression equation is termed an influential observation. In regression, such a value need not be associated with a large residual. As an example, consider two regression lines fit to the same scatterplot: a solid line that corresponds to the regression with all the data, and a dashed line that corresponds to the regression with the point in the upper-right removed. Clearly, that data value has a huge influence on the regression even though it is not associated with a large outlier from the full regression. This data value is considered to have high leverage on the regression. An influence plot for the King County house data can be created with R code. There are apparently several data points that exhibit large influence in the regression. Cook's distance can be computed using the function cooks.distance, and you can use hatvalues to compute the diagnostics. A comparison of the regression with the full data set and with highly influential data points removed shows that the regression coefficient for Bathrooms changes quite dramatically. For purposes of fitting a regression that reliably predicts future data, identifying influential observations is only useful in smaller data sets. For regressions involving many records, it is unlikely that any one observation will carry sufficient weight to cause extreme influence on the fitted equation (although the regression may still have big outliers). For purposes of anomaly detection, though, identifying influential observations can be very useful. Statisticians pay considerable attention to the distribution of the residuals. In most problems, however, data scientists do not need to be too concerned with the distribution of the residuals. 
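The outlier and leverage diagnostics described above can be sketched for a one-predictor regression without any statistics package. This is a hedged illustration, not the book's R code: the function name is hypothetical, the hat-value for simple regression is taken as h_i = 1/n + (x_i − x̄)²/S_xx, and each residual is divided by its standard error S_e · sqrt(1 − h_i), which is the usual definition of a standardized residual.

```python
import math


def leverage_and_standardized_residuals(xs, ys):
    """Hat-values and standardized residuals for a simple linear regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    s_e = math.sqrt(sum(e ** 2 for e in residuals) / (n - 2))
    # Leverage: how far each x sits from the mean of the predictor
    hat = [1 / n + (x - x_bar) ** 2 / sxx for x in xs]
    # Each residual divided by its own standard error
    standardized = [e / (s_e * math.sqrt(1 - h)) for e, h in zip(residuals, hat)]
    return hat, standardized
```

A point far from the other x values gets a hat-value near 1 even when its residual is small, which matches the observation above that an influential value need not be associated with a large residual.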
The distribution of the residuals is relevant mainly for the validity of formal statistical inference (hypothesis tests and p-values), which is of minimal importance to data scientists concerned mainly with predictive accuracy. For formal inference to be fully valid, the residuals are assumed to be normally distributed, have the same variance, and be independent. Heteroskedasticity is the lack of constant residual variance across the range of the predicted values. In other words, errors are greater for some portions of the range than for others. The ggplot2 package has some convenient tools to analyze residuals, such as a plot of the absolute residuals against the predicted values. The plotting function calls the loess method to produce a visual smooth to estimate the relationship between the variables on the x-axis and y-axis in a scatterplot (see Scatterplot Smoothers). Evidently, the variance of the residuals tends to increase for higher-valued homes, but is also large for lower-valued homes. Heteroskedasticity indicates that prediction errors differ for different ranges of the predicted value, and may suggest an incomplete model. The distribution of the standardized residuals has decidedly longer tails than the normal distribution, and exhibits mild skewness toward larger residuals. Statisticians may also check the assumption that the errors are independent. This is particularly true for data that is collected over time. The Durbin-Watson statistic can be used to detect if there is significant autocorrelation in a regression involving time series data. Even though a regression may violate one of the distributional assumptions, should we care? Most often in data science, the interest is primarily in predictive accuracy, so some review of heteroskedasticity may be in order. You may discover that there is some signal in the data that your model has not captured. Satisfying distributional assumptions simply for the sake of validating formal statistical inference (p-values, F-statistics, etc.) is of minimal importance to the data scientist. 
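As a small illustration of the Durbin-Watson statistic mentioned above, the sketch below computes it from a list of residuals in time order, using the standard definition: the sum of squared successive differences divided by the sum of squared residuals. The function name is hypothetical, and no critical values are computed here.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in time order."""
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2
        for i in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator
```

The statistic ranges from 0 to 4: values near 2 suggest little first-order autocorrelation, values near 0 suggest positive autocorrelation, and values near 4 suggest negative autocorrelation.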
Regression is about modeling the relationship between the response and predictor variables. In evaluating a regression model, it is useful to use a scatterplot smoother to visually highlight relationships between two variables. For example, in Figure , a smooth of the relationship between the absolute residuals and the predicted value shows that the variance of the residuals depends on the value of the residual. In this case, the loess function was used; loess works by repeatedly fitting a series of local regressions to contiguous subsets to come up with a smooth. While loess is probably the most commonly used smoother, other scatterplot smoothers are available in R, such as super smooth supsmu and kernel smoothing ksmooth. For the purposes of evaluating a regression model, there is typically no need to worry about the details of these scatterplot smooths. Partial residual plots are a way to visualize how well the estimated fit explains the relationship between a predictor and the outcome. Along with detection of outliers, this is probably the most important diagnostic for data scientists. The basic idea of a partial residual plot is to isolate the relationship between a predictor variable and the response, taking into account all of the other predictor variables. A partial residual for predictor X i is the ordinary residual plus the regression term associated with X i :. The partial residual plot displays the X i on the x-axis and the partial residuals on the y-axis. Using ggplot2 makes it easy to superpose a smooth of the partial residuals. The resulting plot is shown in Figure The partial residual is an estimate of the contribution that SqFtTotLiving adds to the sales price. The relationship between SqFtTotLiving and the sales price is evidently nonlinear. The regression line underestimates the sales price for homes less than 1, square feet and overestimates the price for homes between 2, and 3, square feet. 
There are too few data points above 4, square feet to draw conclusions for those homes. This nonlinearity makes sense in this case: adding feet in a small home makes a much bigger difference than adding feet in a large home. The relationship between the response and a predictor variable is not necessarily linear. The demand for a product is not a linear function of marketing dollars spent since, at some point, demand is likely to be saturated. There are several ways that regression can be extended to capture these nonlinear effects. What kind of models are nonlinear? Essentially all models where the response cannot be expressed as a linear combination of the predictors or some transform of the predictors. Nonlinear regression models are harder and computationally more intensive to fit, since they require numerical optimization. For this reason, it is generally preferred to use a linear model if possible. Polynomial regression involves including polynomial terms to a regression equation. The use of polynomial regression dates back almost to the development of regression itself with a paper by Gergonne in For example, a quadratic regression between the response Y and the predictor X would take the form:. Polynomial regression can be fit in R through the poly function. For example, the following fits a quadratic polynomial for SqFtTotLiving with the King County housing data:. There are now two coefficients associated with SqFtTotLiving : one for the linear term and one for the quadratic term. Polynomial regression only captures a certain amount of curvature in a nonlinear relationship. An alternative, and often superior, approach to modeling nonlinear relationships is to use splines. Splines provide a way to smoothly interpolate between fixed points. Splines were originally used by draftsmen to draw a smooth curve, particularly in ship and aircraft building. The technical definition of a spline is a series of piecewise continuous polynomials. 
They were first developed during World War II at the US Aberdeen Proving Grounds by I. Schoenberg, a Romanian mathematician. The polynomial pieces are smoothly connected at a series of fixed points in a predictor variable, referred to as knots. Formulation of splines is much more complicated than polynomial regression; statistical software usually handles the details of fitting a spline. The R package splines includes the function bs to create a b-spline term in a regression model. For example, the following adds a b-spline term to the house regression model:. Two parameters need to be specified: the degree of the polynomial and the location of the knots. By default, bs places knots at the boundaries; in addition, knots were also placed at the lower quartile, the median quartile, and the upper quartile. In contrast to a linear term, for which the coefficient has a direct meaning, the coefficients for a spline term are not interpretable. Instead, it is more useful to use the visual display to reveal the nature of the spline fit. Figure displays the partial residual plot from the regression. In contrast to the polynomial model, the spline model more closely matches the smooth, demonstrating the greater flexibility of splines. In this case, the line more closely fits the data. Does this mean the spline regression is a better model? Suppose you suspect a nonlinear relationship between the response and a predictor variable, either by a priori knowledge or by examining the regression diagnostics. Polynomial terms may not be flexible enough to capture the relationship, and spline terms require specifying the knots. Generalized additive models , or GAM , are a technique to automatically fit a spline regression. The gam package in R can be used to fit a GAM model to the housing data:. 
For more on spline models and GAMs, see The Elements of Statistical Learning by Trevor Hastie, Robert Tibshirani, and Jerome Friedman, and its shorter cousin based on R, An Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie, and Robert Tibshirani; both are Springer books. Perhaps no other statistical method has seen greater use over the years than regression, the process of establishing a relationship between multiple predictor variables and an outcome variable. The fundamental form is linear: each predictor variable has a coefficient that describes a linear relationship between the predictor and the outcome. More advanced forms of regression, such as polynomial and spline regression, permit the relationship to be nonlinear. In data science, by contrast, the goal is typically to predict values for new data, so metrics based on predictive accuracy for out-of-sample data are used. Variable selection methods are used to reduce dimensionality and create more compact models. In the Bayesian context, instead of estimates of unknown parameters, there are posterior and prior distributions. Otherwise, the default in R is to produce a matrix with P - 1 columns with the first factor level as a reference. An alternative to directly using zip code as a factor variable, ZipGroup clusters similar zip codes into a single group. The hat-values correspond to the diagonal of H. Location has not been taken into account and the zip code contains areas of disparate types of homes. Chapter 4. Regression and Prediction: Perhaps the most common goal in statistics is to answer the question: Is the variable X or more likely, X 1 , Simple Linear Regression: Simple linear regression models the relationship between the magnitude of one variable and that of a second; for example, as X increases, Y also increases. 
The Regression Equation: Simple linear regression estimates exactly how much Y will change when X changes by a certain amount.

Figure: Cotton exposure versus lung capacity.

Figure: Slope and intercept for the regression fit to the lung data.

Fitted Values and Residuals: Important concepts in regression analysis are the fitted values and residuals.

Figure: Residuals from a regression line (note the different y-axis scale from the earlier figure, hence the apparently different slope).

Least Squares: How is the model fit to the data?

Regression Terminology: When analysts and researchers use the term regression by itself, they are typically referring to linear regression; the focus is usually on developing a linear model to explain the relationship between predictor variables and a numeric outcome variable.

Prediction versus Explanation (Profiling): Historically, a primary use of regression was to illuminate a supposed linear relationship between predictor variables and an outcome variable.

Example: King County Housing Data: An example of using regression is in estimating the value of houses. The first rows of the data can be viewed with head(house[, c("AdjSalePrice", "SqFtTotLiving", "SqFtLot", "Bathrooms", "Bedrooms", "BldgGrade")]), which returns a local data frame of 6 rows and 6 columns: AdjSalePrice (dbl), SqFtTotLiving (int), SqFtLot (int), Bathrooms (dbl), Bedrooms (int), and BldgGrade (int). The regression output lists coefficients for the intercept and for predictors including SqFtTotLiving, SqFtLot, and Bathrooms.

Assessing the Model: The most important performance metric from a data science perspective is root mean squared error, or RMSE. In cross-validation, a portion of the data is held out, the model is trained on the remaining data, and the process is repeated until each record has been used in the holdout portion. The model assessment metrics are then averaged or otherwise combined.

Model Selection and Stepwise Regression: In some problems, many variables could be used as predictors in a regression. Adding more variables, however, does not necessarily mean we have a better model.
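The holdout procedure and the RMSE metric described above can be sketched as follows. This is a minimal example under stated assumptions: the helper names (`fit_line`, `rmse`, `k_fold_rmse`), the choice of five folds, and the simulated data are all hypothetical. A one-predictor model is used only to keep the code short.

```python
import math
import random


def fit_line(x, y):
    """Least-squares (intercept, slope) for a single predictor."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def k_fold_rmse(x, y, k=5, seed=0):
    """Average holdout RMSE over k folds; each record is held out exactly once."""
    indices = list(range(len(x)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k disjoint holdout sets
    scores = []
    for holdout in folds:
        held = set(holdout)
        train = [i for i in indices if i not in held]
        # Train on the remaining data, then score the holdout portion.
        b0, b1 = fit_line([x[i] for i in train], [y[i] for i in train])
        preds = [b0 + b1 * x[i] for i in holdout]
        scores.append(rmse([y[i] for i in holdout], preds))
    return sum(scores) / k  # combine the per-fold metrics by averaging


# Simulated data: a linear trend plus noise with standard deviation 1.
rng = random.Random(1)
x = [float(i) for i in range(40)]
y = [2.0 + 0.5 * xi + rng.gauss(0, 1.0) for xi in x]

cv_rmse = k_fold_rmse(x, y)
```

Because every prediction is made on records the model did not see during fitting, `cv_rmse` estimates out-of-sample error. That is the quantity to compare when deciding whether an added variable actually improves the model.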
AIC, BIC and Mallows Cp: The formula for AIC may seem a bit mysterious, but in fact it is based on asymptotic results in information theory. There are several variants of AIC; for example, AICc is a version of AIC corrected for small sample sizes.

Weighted Regression: Weighted regression is used by statisticians for a variety of purposes; in particular, it is important for analysis of complex surveys. Data scientists may find weighted regression useful in two cases, one of which is inverse-variance weighting, used when different observations have been measured with different precision.

Further Reading: An excellent treatment of cross-validation and resampling can be found in An Introduction to Statistical Learning by Gareth James, et al.

Prediction Using Regression: The primary purpose of regression in data science is prediction.

The Dangers of Extrapolation: Regression models should not be used to extrapolate beyond the range of the data.

Confidence and Prediction Intervals: Much of statistics involves understanding and measuring variability (uncertainty). A bootstrap confidence interval for the regression coefficients treats the records as tickets in a box: draw a ticket at random, record the values, and replace it in the box; repeat this n times to obtain one bootstrap resample; fit a regression to the bootstrap sample and record the estimated coefficients; then repeat the whole procedure, say, 1,000 times.

The bootstrap algorithm for modeling both the regression model error and the individual data point error would look as follows: take a bootstrap sample from the data (spelled out in greater detail earlier); fit the regression and predict the new value; account for the individual data point error by adding a randomly drawn residual to the prediction; repeat, say, 1,000 times; and find the 2.5th and 97.5th percentiles of the results.

Prediction Interval or Confidence Interval?

Factor Variables in Regression: Factor variables, also termed categorical variables, take on a limited number of discrete values.

Dummy Variables Representation: In the King County housing data, there is a factor variable for the property type; a small subset of six records is shown below.
head(house[, 'PropertyType']) returns a local data frame of 6 rows and 1 column:

| Row | PropertyType (fctr) |
| --- | --- |
| 1 | Multiplex |
| 2 | Single Family |
| 3 | Single Family |
| 4 | Single Family |
| 5 | Single Family |
| 6 | Townhouse |

There are three possible values: Multiplex, Single Family, and Townhouse.

Factor Variables with Many Levels: Some factor variables can produce a huge number of binary dummies; zip codes are a factor variable, and there are 43,000 zip codes in the US.

Ordered Factor Variables: Some factor variables reflect levels of a factor; these are termed ordered factor variables or ordered categorical variables.

Table: A typical data format

| Value | Description |
| --- | --- |
| 1 | Cabin |
| 2 | Substandard |
| 5 | Fair |
| 10 | Very good |
| 12 | Luxury |
| 13 | Mansion |

Treating ordered factors as a numeric variable preserves the information contained in the ordering that would be lost if it were converted to a factor.

Interpreting the Regression Equation: In data science, the most important use of regression is to predict some dependent (outcome) variable.

Correlated Predictors: In multiple regression, the predictor variables are often correlated with each other. One regression output in this discussion lists coefficients for the intercept, Bedrooms, BldgGrade, PropertyTypeSingle Family, PropertyTypeTownhouse, and YrBuilt. The update function can be used to add or remove variables from a model.

Multicollinearity: An extreme case of correlated variables produces multicollinearity, a condition in which there is redundancy among the predictor variables.
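The link between dummy variables and redundancy among predictors can be illustrated with the six PropertyType records quoted above. In this minimal sketch the function name `dummy_encode` and the `drop_first` flag are hypothetical. Choosing the alphabetically first level as the reference is an assumption made here for illustration.

```python
def dummy_encode(values, drop_first=True):
    """Binary indicator columns for a factor; optionally drop the reference level."""
    levels = sorted(set(values))
    kept = levels[1:] if drop_first else levels
    rows = [[1 if value == level else 0 for level in kept] for value in values]
    return kept, rows


# The six PropertyType records quoted in the text.
property_type = [
    "Multiplex",
    "Single Family",
    "Single Family",
    "Single Family",
    "Single Family",
    "Townhouse",
]

# All P = 3 indicator columns: every row sums to 1, so together they
# duplicate the intercept column and are redundant as predictors.
full_levels, full_rows = dummy_encode(property_type, drop_first=False)
row_sums = [sum(row) for row in full_rows]

# P - 1 = 2 columns, with the first level (Multiplex) as the reference.
ref_levels, ref_rows = dummy_encode(property_type, drop_first=True)
```

With the reference level dropped, a Multiplex record is encoded as all zeros, and the remaining coefficients are read relative to it. This is consistent with the coefficient names PropertyTypeSingle Family and PropertyTypeTownhouse that appear in the regression output above.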

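The bootstrap approach to prediction intervals described earlier can be sketched in a few lines. The helper names, the simulated data, and the single-predictor model are hypothetical. The residual draw and the 2.5th/97.5th percentile cutoffs follow the standard form of this procedure and should be read as assumptions rather than as a definitive implementation.

```python
import random


def fit_line(x, y):
    """Least-squares (intercept, slope) for a single predictor."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def bootstrap_prediction_interval(x, y, x_new, n_boot=1000, seed=0):
    """Approximate 95% prediction interval for a new observation at x_new."""
    rng = random.Random(seed)
    n = len(x)
    b0, b1 = fit_line(x, y)
    # Residuals of the original fit stand in for individual data point error.
    residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample rows with replacement
        bx = [x[i] for i in idx]
        by = [y[i] for i in idx]
        if len(set(bx)) < 2:
            continue  # degenerate resample: a line cannot be fit
        s0, s1 = fit_line(bx, by)
        # Model error (refit line) plus data point error (random residual).
        draws.append(s0 + s1 * x_new + rng.choice(residuals))
    draws.sort()
    lower = draws[int(0.025 * len(draws))]
    upper = draws[int(0.975 * len(draws)) - 1]
    return lower, upper


# Simulated data: a linear trend plus noise with standard deviation 1.
rng = random.Random(1)
x = [float(i) for i in range(40)]
y = [2.0 + 0.5 * xi + rng.gauss(0, 1.0) for xi in x]

lower, upper = bootstrap_prediction_interval(x, y, x_new=20.0)
```

Leaving out the `rng.choice(residuals)` term would yield an interval for the fitted mean only, which is narrower. Adding the residual is what turns the result into an interval for an individual future value.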